Sprint Backlog 10

Sprint Mission

- Implement party related ref data

Stories

Active

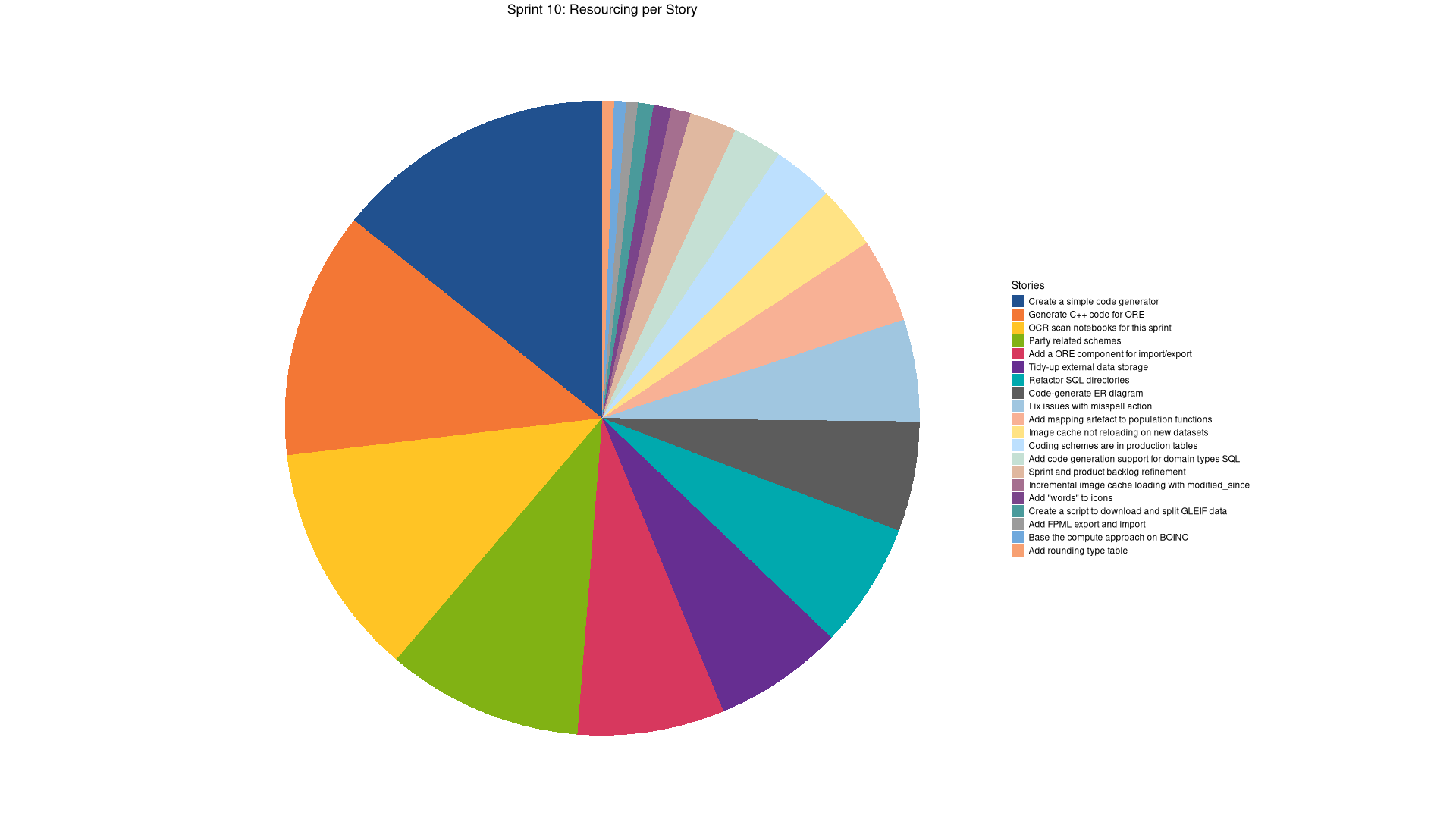

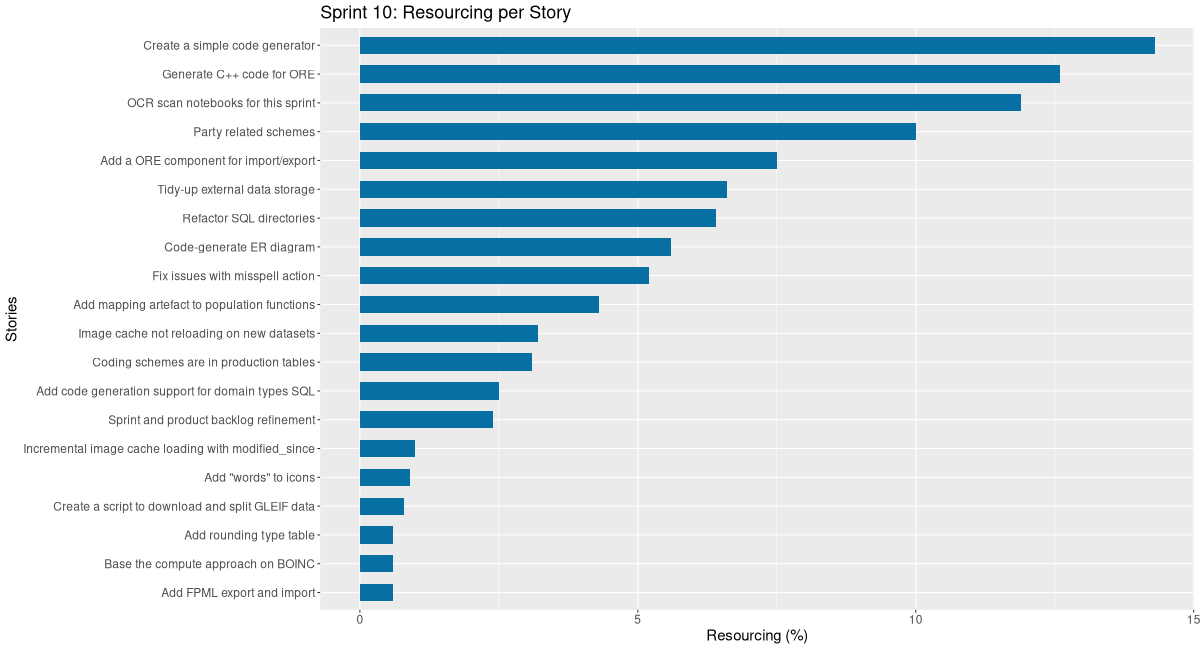

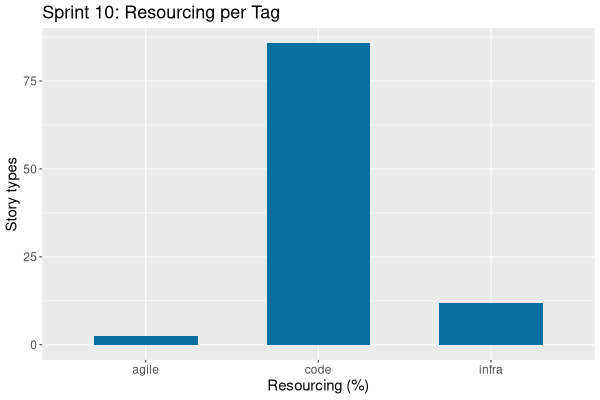

| Tags | Headline | Time | % |
|---|---|---|---|
| | Total time | 1:30 | 100.0 |
| | Stories | 1:30 | 100.0 |
| | Active | 1:30 | 100.0 |
| code | Generate C++ code for ORE | 1:10 | 77.8 |
| code | Add rounding type table | 0:20 | 22.2 |

| Tags | Headline | Time | % |
|---|---|---|---|
| | Total time | 53:25 | 100.0 |
| | Stories | 53:25 | 100.0 |
| | Active | 53:25 | 100.0 |
| agile | Sprint and product backlog refinement | 1:17 | 2.4 |
| infra | OCR scan notebooks for this sprint | 6:20 | 11.9 |
| code | Create a simple code generator | 7:38 | 14.3 |
| code | Party related schemes | 5:20 | 10.0 |
| code | Image cache not reloading on new datasets | 1:43 | 3.2 |
| code | Create a script to download and split GLEIF data | 0:25 | 0.8 |
| code | Incremental image cache loading with modified_since | 0:31 | 1.0 |
| code | Fix issues with misspell action | 2:47 | 5.2 |
| code | Tidy-up external data storage | 3:33 | 6.6 |
| code | Coding schemes are in production tables | 1:39 | 3.1 |
| code | Refactor SQL directories | 3:24 | 6.4 |
| code | Code-generate ER diagram | 3:01 | 5.6 |
| code | Add "words" to icons | 0:28 | 0.9 |
| code | Add mapping artefact to population functions | 2:19 | 4.3 |
| code | Add an ORE component for import/export | 4:00 | 7.5 |
| code | Generate C++ code for ORE | 6:43 | 12.6 |
| code | Add code generation support for domain types SQL | 1:20 | 2.5 |
| code | Add FPML export and import | 0:19 | 0.6 |
| code | Base the compute approach on BOINC | 0:18 | 0.6 |
| code | Add rounding type table | 0:20 | 0.6 |

STARTED Sprint and product backlog refinement agile

Updates to sprint and product backlog.

COMPLETED OCR scan notebooks for this sprint infra

We need to scan all of our finance notebooks so we can use them with AI. Each sprint will have a story similar to this until we scan and process them all.

COMPLETED Create a simple code generator code

We keep running out of Claude Code tokens. One way around this is to produce the basic infrastructure via code generation and then use Claude to fix any issues.

COMPLETED Add party identifier scheme entity code

The following is the analysis for adding support to party schemes.

List of schemes to seed in SQL:

- party scheme: LEI
  - Name: Legal Entity Identifier
  - URI: http://www.fpml.org/coding-scheme/external/iso17442
  - Description: Legal Entity Identifier (ISO 17442, 20-char alphanumeric). Global standard for legal entities.
- party scheme: BIC
  - Name: Business Identifier Code
  - URI: http://www.fpml.org/coding-scheme/external/iso9362
  - Description: Business Identifier Code (SWIFT/BIC, ISO 9362). Used for banks and financial institutions.
- party scheme: MIC
  - Name: Market Identifier Code
  - URI: http://www.fpml.org/coding-scheme/external/iso10383
  - Description: Market Identifier Code (ISO 10383). Identifies trading venues (e.g., XNYS, XLON). Note: Technically a venue, but often linked to party context in trade reports.
- party scheme: CEDB
  - Name: CFTC Entity Directory
  - URI: http://www.fpml.org/coding-scheme/external/cedb
  - Description: CFTC Entity Directory (US-specific). Used in CFTC swap data reporting for non-LEI entities.
- party scheme: Natural Person
  - Name: Natural Person
  - URI: http://www.fpml.org/coding-scheme/external/person-id
  - Description: Generic identifier for individuals (e.g., employee ID, trader ID). Not standardized; value interpreted contextually.
- party scheme: National ID
  - Name: National Identifier
  - URI: http://www.fpml.org/coding-scheme/external/national-id
  - Description: National identifiers (e.g., passport number, tax ID, SIREN, ORI, national ID card). Covers MiFID II “client identification” requirements.
- party scheme: Internal
  - Name: Internal
  - URI: http://www.fpml.org/coding-scheme/external/party-id-internal
  - Description: Proprietary/internal system identifiers (e.g., client ID in your OMS, CRM, or clearing system).
- party scheme: ACER Code
  - Name: EU Agency for the Cooperation of Energy Regulators
  - URI: http://www.fpml.org/coding-scheme/external/acer-code
  - Description: ACER (EU Agency for the Cooperation of Energy Regulators) code. Required for REMIT reporting by non-LEI energy market participants. Officially supported in FpML energy extensions.
- party scheme: DTCC Participant ID
  - Name: Depository Trust & Clearing Corporation Participant ID
  - URI:
  - Description: DTCC Participant ID: A unique numeric identifier (typically 4–6 digits) assigned by the Depository Trust & Clearing Corporation (DTCC) to member firms authorized to participate in U.S. clearing and settlement systems, including DTC, NSCC, and FICC. Used in post-trade processing, trade reporting, and regulatory submissions in U.S. capital markets.
- party scheme: AII / MPID
  - Name: Alternative Trading System (ATS) Identification Indicator / Market Participant ID
  - Description: Commonly referred to as MPID – Market Participant Identifier; AII stands for ATS Identification Indicator, a term used in FINRA contexts. A four-character alphanumeric code assigned by FINRA (Financial Industry Regulatory Authority) to broker-dealers and alternative trading systems (ATSs) operating in U.S. equities markets. Used to identify the originating or executing firm in trade reports (e.g., in OATS, TRACE, or consolidated tape reporting). MPIDs are required for all SEC-registered trading venues and participants in U.S. equity and options markets.

COMPLETED Party related schemes code

We need to implement these before we can implement party:

- entity type

- party role

- local jurisdiction

- business center

- person role

- account types

- entity classifications

- local jurisdictions

- party relationships

- party roles

- person roles

- regulatory corporate sectors

- reporting regimes

- supervisory bodies

This pull request introduces a robust and automated system for managing FPML reference data within the platform. It significantly upgrades the codegen capabilities to parse FPML XML, generate comprehensive SQL database structures, and create scripts for populating both staging and production tables. The changes also streamline the data publication process by embedding key operational metadata directly into dataset definitions, moving towards a more configurable and less hard-coded approach for data management.

Highlights:

- FPML Reference Data Workflow: Implemented a complete Data Quality (DQ) artefact workflow for 15 FPML reference data entity types, encompassing 18 distinct datasets.

- SQL Schema Generation: Added automated SQL schema generation for FPML entities, including bi-temporal tables, notification triggers, and dedicated artefact staging tables.

- Image Linking: Introduced functionality to link business centres to country flags, enhancing visual representation within the data.

- Architecture Refinement: Refactored SQL generation to produce individual files per entity/coding-scheme instead of concatenated scripts, improving modularity and maintainability.

- Dataset Metadata Enhancement: Extended dataset records to directly store target_table and populate_function details, enabling more dynamic and flexible data publication.

COMPLETED Image cache not reloading on new datasets code

When we publish images, the image cache does not reload.

COMPLETED Create a script to download and split GLEIF data code

We need some data to drive the new Party tables. We can get GLEIF data for this. The dataset is too large, so we need to obtain a representative subset.
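One way to cut the dataset down is to reservoir-sample rows while streaming the file, so the full download never has to fit in memory. The sketch below is only an illustration under assumptions: the two-column CSV layout (lei, name) and the function name sample_rows are hypothetical, and a uniform random sample is just one possible reading of "representative".

```python
import csv
import random


def sample_rows(lines, k, seed=0):
    """Reservoir-sample up to k data rows from a CSV stream, keeping the header.

    `lines` is any iterable of CSV text lines (for example an open file).
    A fixed seed keeps the subset reproducible between runs.
    """
    reader = csv.reader(lines)
    header = next(reader)
    rng = random.Random(seed)
    reservoir = []
    for i, row in enumerate(reader):
        if i < k:
            # Fill the reservoir with the first k rows.
            reservoir.append(row)
        else:
            # Replace an existing entry with probability k / (i + 1).
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = row
    return header, reservoir
```

With a real file this would be called as sample_rows(open(path, newline=""), 1000); the path and the sample size are placeholders.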

Links:

COMPLETED Incremental image cache loading with modified_since code

The ImageCache currently clears all cached images and re-fetches everything on reload. This is inefficient when only a few images have changed. We should track the last load time and only fetch images that have been modified since then.

The image domain already has a recorded_at timestamp field that tracks when each image was last modified.

Required changes:

- Protocol (assets_protocol.hpp):
  - Add std::optional<std::chrono::system_clock::time_point> modified_since parameter to list_images_request.
  - Add recorded_at field to image_info struct in the response.
- Server handler:
  - Update the list_images handler to filter images where recorded_at >= modified_since when the parameter is provided.
  - Return all images when modified_since is not set (current behaviour).
- Client ImageCache (ImageCache.hpp/cpp):
  - Add last_load_time_ member to track when images were last loaded.
  - On reload(), don't clear caches; instead send modified_since with last load time.
  - Only fetch and update images that were returned (changed since last load).
  - Update last_load_time_ after successful load.

Acceptance criteria:

- When publishing an image dataset, only changed images are fetched.

- UI refresh is minimized (no flashing for unchanged images).

- Full reload still possible (e.g., on first load or explicit cache clear).

- Protocol is backward compatible (server handles missing modified_since).
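The flow described in this story can be modelled in a few lines. This is a language-neutral sketch in Python rather than the C++ implementation; FakeServer, ImageInfo and the method names are stand-ins invented for illustration, and only modified_since, recorded_at and the reload behaviour come from the notes above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ImageInfo:
    image_id: str
    recorded_at: datetime


class FakeServer:
    """Stand-in for the list_images handler (hypothetical)."""

    def __init__(self):
        self.images = {}

    def publish(self, image_id, recorded_at):
        self.images[image_id] = ImageInfo(image_id, recorded_at)

    def list_images(self, modified_since: Optional[datetime] = None):
        # Missing parameter means "return everything" (backward compatible).
        if modified_since is None:
            return list(self.images.values())
        return [i for i in self.images.values()
                if i.recorded_at >= modified_since]


class ImageCache:
    def __init__(self, server):
        self.server = server
        self.cache = {}
        self.last_load_time = None

    def reload(self, now):
        """Fetch only images changed since the last load; return their ids."""
        changed = self.server.list_images(self.last_load_time)
        for info in changed:
            self.cache[info.image_id] = info
        # Only advance the marker once the load has succeeded.
        self.last_load_time = now
        return [info.image_id for info in changed]

    def clear(self):
        """Explicit cache clear: the next reload fetches everything."""
        self.cache.clear()
        self.last_load_time = None
```

The sketch passes the current time in explicitly to keep it deterministic. Whether the marker should come from the client clock or from a server-supplied timestamp is a design choice the notes leave open.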

COMPLETED Fix issues with misspell action code

FPML and LEI data are breaking the misspell action. We need to find a way to ignore folders.

This was complicated in the end because we relied on GLM 4.7, but it got very confused and kept suggesting approaches that were not very useful. In the end, fixed it manually.

COMPLETED Tidy-up external data storage code

At present we have external data all over the place, making it hard to understand what's what. We need to consolidate it all under https://github.com/OreStudio/OreStudio/blob/main/external.

This pull request introduces a significant refactoring of how reference data is managed and generated within the project. By reorganizing data into domain-specific directories, implementing manifest files for better source tracking, and developing code generators, the changes aim to streamline the process of maintaining and updating reference data. The relocation of external data and the introduction of master include files further enhance the system's structure and clarity, ultimately improving the robustness and maintainability of the data population process.

Highlights:

- Reference Data Reorganization: The populate directory has been restructured into domain-specific subdirectories, including fpml/, crypto/, flags/, and solvaris/, to improve organization and modularity.

- Manifest Files Introduction: New manifest.json files have been added for external data sources (flags, crypto) and internal solvaris data, providing a centralized way to manage data sources.

- Code Generator Creation: Dedicated code generators have been developed for flags, crypto, and solvaris metadata SQL, automating the generation of SQL files from source data.

- External Data Relocation: External data has been moved to a new 'external/' directory, accompanied by proper methodology documentation to clarify data sourcing and usage.

- Master Include Files: Master include files (e.g., fpml.sql, crypto.sql, flags.sql, solvaris.sql) have been added for each domain, simplifying the inclusion of related SQL scripts.

- Populate Ordering Fixes: The ordering of data population has been adjusted to resolve existing dataset dependency issues, ensuring correct data loading.

COMPLETED Coding schemes are in production tables code

We need to add datasets for coding schemes. At present they are going directly into production tables.

This pull request significantly refactors the management of ISO and FpML coding schemes by integrating them into a robust Data Quality (DQ) artefact pipeline. This change standardizes the process for handling external reference data, ensuring greater consistency and control over data publication. It introduces new database structures and functions to support a more governed lifecycle for these critical datasets, allowing for previewing changes and flexible update strategies, while maintaining direct insertion for specific internal party schemes.

Highlights:

- Standardized Coding Scheme Management: ISO and FpML coding schemes are now processed through a dedicated Data Quality (DQ) artefact pipeline, moving away from direct database insertions. This ensures a more consistent and governed approach to managing external reference data.

- Enhanced Data Governance: The new pipeline supports preview, upsert, insert-only, and replace-all modes for publishing coding schemes, offering greater control and consistency over data updates and lifecycle management.

- New Schema Components: A new staging table, dq_coding_schemes_artefact_tbl, has been introduced for temporary storage of coding schemes. Additionally, new functions dq_preview_coding_scheme_population() and dq_populate_coding_schemes() are provided to manage the publication process to production.

- Automated Generation and Dependencies: Code generators for ISO and FpML have been updated to produce artefact inserts for the new pipeline. Dependencies for ISO countries and currencies now correctly link to the new iso.coding_schemes dataset, ensuring proper ordering during data loading.

- Exclusion of Party Schemes: Certain party-related coding schemes (e.g., LEI, BIC, MIC) will continue to be handled via direct inserts, acknowledging their distinct, manually-defined nature and keeping them separate from the new pipeline.
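To make the four publication modes concrete, here is a small model that uses dictionaries in place of the staging and production tables. The exact semantics of each mode are an assumption inferred from the mode names; the real behaviour lives in the SQL functions named above, and the function names preview and publish are hypothetical.

```python
def preview(production, staged):
    """Report what a publish would do, without changing anything."""
    return {
        "insert": sorted(k for k in staged if k not in production),
        "update": sorted(k for k in staged
                         if k in production and production[k] != staged[k]),
        "unchanged": sorted(k for k in staged
                            if k in production and production[k] == staged[k]),
    }


def publish(production, staged, mode):
    """Apply staged rows to production according to the chosen mode."""
    if mode == "upsert":
        # New keys are inserted, existing keys are overwritten.
        production.update(staged)
    elif mode == "insert_only":
        # Existing keys are left untouched.
        for key, value in staged.items():
            production.setdefault(key, value)
    elif mode == "replace_all":
        # Production ends up as an exact copy of staging.
        production.clear()
        production.update(staged)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return production
```

For example, publishing {"USD": "US Dollar"} over {"USD": "Dollar", "EUR": "Euro"} keeps EUR under upsert and insert_only but drops it under replace_all.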

COMPLETED Refactor SQL directories code

At present we have all files in one directory. As we add more tables it's becoming quite hard to follow.

Notes:

- user ores must be a variable.

- Restructure create, drop/teardown and update documentation

This pull request significantly refactors the SQL project's structure and database management processes. The primary goal is to enhance the organization of SQL creation scripts into logical components, improve the safety and auditability of database teardown operations, and update documentation to reflect these changes and provide clearer guidance. These changes streamline development workflows and reduce the risk of accidental data loss, particularly in production environments.

Highlights:

- Directory Structure Refactoring: The create/ directory has been reorganized from a flat structure into component-based subdirectories (e.g., iam/, dq/, refdata/, assets/), each with a master script to orchestrate its files.

- Enhanced Drop/Teardown Approach: The database teardown process has been restructured for improved traceability and safety. Production database drops now require explicit, reviewable SQL scripts generated by admin/admin_teardown_instances_generate.sql, moving away from pattern matching for critical databases. A centralized drop/drop_database.sql handles single database teardowns, and teardown_all.sql provides a comprehensive, confirmed cluster-wide cleanup.

- Documentation Cleanup and Updates: Outdated directory structure information has been removed from ores.sql.org and cross-references added to the SQL Schema Creator skill documentation. The ER diagram (ores_schema.puml) has been significantly updated to include 15 new refdata tables and 19 new data quality tables, reflecting a more complete schema.

- Stale Code Removal and Fixes: Thirteen stale entries have been removed from various drop scripts, and a redundant constraint drop in dq_tags_artefact_create.sql has been fixed, improving code cleanliness and efficiency.

- Refactor drop/ and populate/ directory structure

This pull request introduces a significant refactoring of the SQL script organization within the drop/ and populate/ directories. The changes aim to enhance modularity and maintainability by grouping related SQL files into component-specific subdirectories and establishing consistent naming conventions for master scripts. This restructuring simplifies navigation and management of database schema and data population scripts.

Highlights:

- Directory Structure Reorganization: The drop/ directory has been reorganized from a flat structure into component-based subdirectories, including assets, dq, geo, iam, refdata, seed, telemetry, utility, and variability.

- File Relocation and Git History Preservation: 56 SQL files have been moved into their respective new component subdirectories, with their git history preserved to maintain traceability.

- Consistent Naming Conventions: New component master files have been created with consistent naming patterns: drop_COMPONENT.sql and populate_COMPONENT.sql.

- Master Drop File Updates: The drop_all.sql file has been removed and replaced by drop/drop.sql, and drop_database.sql has also been relocated into the drop/ directory.

- Population Function Refactoring: The monolithic seed_upsert_functions_drop.sql has been split into individual per-component population function files (e.g., dq_population_functions_drop.sql, iam_population_functions_drop.sql), improving modularity.

- Populate Master File Alignment: All populate/ master files have been updated to align with the new consistent naming convention (populate_COMPONENT.sql).

- Refactor coding schemes and clean up ores.sql directory structure

This pull request significantly improves the maintainability and organization of the ores.sql project by standardizing directory structures and enforcing consistent naming conventions for administrative database objects. It also integrates FPML coding schemes into a more robust data quality pipeline, streamlining data management and generation processes. These changes enhance clarity, reduce technical debt, and ensure future scalability and ease of development.

Highlights:

- Coding Schemes Refactor: FPML coding schemes have been refactored to integrate with the DQ artefact pipeline, ensuring proper dataset management, fixing ordering and URI escaping, and consolidating codegen documentation.

- Directory Structure Reorganization: The projects/ores.sql directory has been cleaned up and reorganized. This includes removing deprecated/obsolete files, renaming the schema/ folder to create/, and introducing a utility/ folder for developer tools.

- Admin Database Object Naming Conventions: Admin database objects (files, functions, and views) now adhere to a consistent naming convention, using an admin_ prefix and _fn or _view suffixes where appropriate.

- C++ Code Updates: The C++ database_name_service has been updated to reflect the new admin_ prefixed SQL function names, ensuring compatibility with the refactored database utilities.

COMPLETED Code-generate ER diagram code

The diagrams are drifting too far from the code; see if we can do a quick hack to code-generate them from source.

This pull request introduces a significant enhancement to the ores.codegen project by implementing an automated system for generating PlantUML Entity-Relationship diagrams directly from the SQL schema. This system not only creates visual representations of the database structure but also incorporates a comprehensive validation engine to enforce schema conventions, ensuring consistency and adherence to best practices. The new tooling streamlines documentation, reduces manual effort, and provides a single source of truth for the database design, making it easier for developers and stakeholders to understand and maintain the data model.

Highlights:

- Automated ER Diagram Generation: Introduced a new codegen system to automatically generate PlantUML Entity-Relationship (ER) diagrams directly from SQL CREATE/DROP statements.

- Comprehensive SQL Parsing: Developed a Python-based SQL parser (plantuml_er_parse_sql.py) capable of extracting detailed schema information including tables, columns, primary keys, unique indexes, and foreign key relationships.

- Intelligent Table Classification: The parser automatically classifies tables into categories like 'temporal', 'junction', and 'artefact' based on their column structure and naming conventions, applying appropriate stereotypes in the diagram.

- Convention Validation: Integrated a robust validation system that checks SQL schema against predefined naming, temporal table, column, drop script completeness, and file organization conventions, issuing warnings or failing builds in strict mode.

- Dynamic Relationship Inference: Relationships between tables are inferred based on foreign key column naming conventions and explicit references, supporting one-to-many and one-to-one cardinalities.

- Mustache Templating for Output: Utilizes a Mustache template (plantuml_er.mustache) to render the parsed JSON model into a human-readable and machine-processable PlantUML diagram format, including descriptions from SQL comments.

- Orchestration and Integration: A shell script (plantuml_er_generate.sh) orchestrates the parsing, model generation, and PlantUML rendering, with an optional step to generate PNG images. A separate validate_schema.sh script provides a dedicated validation entry point.

- Enhanced Documentation: A new doc/plans/er_diagram_codegen.md document outlines the objective, design principles, architecture, components, and implementation steps of the ER diagram generation system.

- SQL Schema Descriptions: Added descriptive comments to 22 existing SQL table definitions (projects/ores.sql/create/**/*.sql) to enrich the generated ER diagram with meaningful context.
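The classification step can be pictured as a handful of rules over a table's name and column list. The rules below are guesses for illustration only: the valid_from/valid_to column names, the "all columns end in _id" junction test and every table name other than dq_coding_schemes_artefact_tbl are hypothetical and may not match what plantuml_er_parse_sql.py actually does.

```python
def classify(table_name, columns):
    """Return a stereotype for a table based on naming and column structure."""
    cols = set(columns)
    # Staging tables are recognised purely by their name suffix.
    if table_name.endswith("_artefact_tbl"):
        return "artefact"
    # Assumed marker columns for bi-temporal tables.
    if {"valid_from", "valid_to"} <= cols:
        return "temporal"
    # A table made up only of foreign-key style columns links two entities.
    id_cols = [c for c in columns if c.endswith("_id")]
    if len(id_cols) >= 2 and len(id_cols) == len(columns):
        return "junction"
    return "plain"
```

The returned string could then be fed to the Mustache template as the stereotype for that entity.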

COMPLETED Add "words" to icons code

To make the toolbar more readable, it would be nice if we could add some words to icons such as the icons for export and import:

- CSV

- ORE

- FPML

Then we could use 2 well-defined icons for import and export across the code-base.

COMPLETED Add mapping artefact to population functions code

At present this is hard-coded.

COMPLETED Add an ORE component for import/export code

As per FPML analysis, it is better to have all of the export/import code in one place rather than scattered across other components. Add a component specific to ORE.

- Create component

This pull request establishes the groundwork for ORE (Open Source Risk Engine) data handling within the system. It introduces a dedicated ores.ore component, complete with its directory structure, CMake build configurations, and initial code stubs. The changes also ensure that the system's architectural documentation is up-to-date with the new component's presence and purpose, laying a solid foundation for future development of ORE import and export capabilities.

Highlights:

- New Component Introduction: A new component, ores.ore, has been added to the project, providing foundational structure for ORE (Open Source Risk Engine) specific import/export functionality.

- Build System Integration: The new ores.ore component is fully integrated into the CMake build system, including its source, modeling, and testing subdirectories.

- Documentation Updates: The system's architectural documentation, including PlantUML diagrams and the system model, has been updated to reflect the inclusion and details of the new ores.ore component.

- Initial Stub Implementation: Basic stub files for domain logic and testing have been created to ensure the component compiles and integrates correctly from the outset.

COMPLETED Generate C++ code for ORE code

It seems a bit painful to have to manually craft entities for parsing ORE and FPML code. Explore tooling in this area.

This pull request significantly upgrades the system's data handling capabilities by integrating xsdcpp for automated C++ type generation from XML schemas. This change not only modernizes the way ORE XML types are managed but also enhances the data quality publication process by introducing a dedicated artefact_type mechanism for managing target tables and population functions. Additionally, it standardizes SQL function naming and corrects currency rounding logic, leading to a more robust and maintainable codebase.

Highlights:

- XSDCPP Code Generation Integration: Introduced infrastructure to automatically generate C++ types from XSD schemas using the xsdcpp tool, streamlining the creation and maintenance of domain types for ORE XML configurations.

- Refactored ORE XML Types: Replaced manually crafted CurrencyConfig and CurrencyElement C++ types with automatically generated currencyConfig and currencyDefinition types, improving consistency and reducing manual effort.

- Enhanced Data Quality (DQ) Publication Infrastructure: Added a new artefact_type domain, entity, mapper, and repository to centralize the lookup of target_table and populate_function for dataset publication, removing these fields directly from the dataset entity.

- SQL Naming Convention Enforcement: Renamed all dq_populate_* SQL functions to follow a consistent dq_populate_*_fn suffix convention across the database schema.

- Currency Rounding Type Correction: Fixed invalid currency rounding types in the CURRENCY_DEFAULTS_POOL within generator.py and in SQL populate scripts, changing 'standard' and 'swedish' to 'Closest', and 'none' to 'Down' for commodity currencies.

Links:

- codesynthesis xsd: good but requires their own library at runtime and it's not on vcpkg.

- codesynthesis xsde: good but requires their own library at runtime and it's not on vcpkg.

- #11: Issues parsing XSD for ORE (Open Source Risk Engine): raised issue against xsdcpp project for ORE schema.

STARTED Add code generation support for domain types SQL code

Now that we have the basic codegen infrastructure working, we need to start code generating the SQL for domain types.

STARTED Add FPML export and import code

Where we can, we should start to add export and import functionality for FPML. This validates our modeling and catches aspects we may be missing.

Currency export:

    <?xml version="1.0" encoding="utf-8"?>
    <referenceDataNotification xmlns="http://www.fpml.org/FpML-5/reporting" fpmlVersion="5-11">
      <header>
        <messageId messageIdScheme="http://sys-a.gigabank.com/msg-id">SYNC-CURR-2026-001</messageId>
        <sentBy>GIGABANK_LEI_A</sentBy>
        <creationTimestamp>2026-01-25T10:00:00Z</creationTimestamp>
      </header>
      <currency>
        <currency currencyScheme="http://www.fpml.org/coding-scheme/external/iso4217">USD</currency>
      </currency>
      <currency>
        <currency currencyScheme="http://www.fpml.org/coding-scheme/external/iso4217">EUR</currency>
      </currency>
    </referenceDataNotification>

and:

    <header>
      <messageId messageIdScheme="http://instance-a.internal/scheme">MSG_99821</messageId>
      <sentBy>INSTANCE_A</sentBy>
      <creationTimestamp>2026-01-25T14:30:00Z</creationTimestamp>
    </header>

- Create component

We need a component to place all of the common infrastructure. As per Gemini analysis:

To achieve FpML schema validation using libxml2, you need to treat the FpML schemas as a "Black Box" of rules. Since FpML is highly modular, you don't just validate against one file; you validate against a "Root" schema that imports the others.

Here is the most efficient path to implementing XML validation for your Identity Layer.

- Download the "Reporting" Schema Set

FpML is divided into "Views." For party and business unit data, you want the Reporting View.

- Go to FpML.org Download Section.

- Download the Reporting View Zip.

- Locate fpml-main-5-11.xsd. This is your entry point.

- The "Schema Repository" Pattern

Libxml2 requires all imported .xsd files to be reachable.

Place the downloaded XSDs in a folder (e.g., schemas/fpml-5-11).

In your code, you will point the xmlSchemaNewParserCtxt to the main file. Libxml2 will automatically follow the <xsd:import> tags to the other files in that directory.

- Creating a "Wrapper" for Partial Validation

FpML schemas are designed to validate full messages (like a Trade Notification), not just a standalone party table row. To validate just your parties, you have two choices:

Option A: The "Fragment" Approach (Easiest for testing)

Construct a minimal, valid FpML "Party Notification" message in memory that wraps your data.

    <partyNotification xmlns="http://www.fpml.org/FpML-5/reporting">
      <header>
        <messageId messageIdScheme="http://yourbank.com/msg-id">MSG001</messageId>
        <sentBy>YOURBANK_LEI</sentBy>
        <sendTo>VALIDATOR</sendTo>
        <creationTimestamp>2026-01-23T09:00:00Z</creationTimestamp>
      </header>
      <party>
        <partyId partyIdScheme="http://www.fpml.org/coding-scheme/external/iso17442">549300V5E66A3S316907</partyId>
        <partyName>GigaBank PLC</partyName>
      </party>
    </partyNotification>
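The fragment approach amounts to building that wrapper message in memory around one party row. A minimal sketch using only the Python standard library follows; it mirrors the element names from the example above, which come from the Gemini analysis and have not been checked against the FpML schema here. The function name wrap_party is hypothetical, and the actual schema validation (libxml2 in the notes) is not shown.

```python
import xml.etree.ElementTree as ET

FPML_NS = "http://www.fpml.org/FpML-5/reporting"
LEI_SCHEME = "http://www.fpml.org/coding-scheme/external/iso17442"


def wrap_party(lei, name, message_id, sent_by, timestamp):
    """Wrap one party in a minimal notification message and return XML text."""
    # Serialise the FpML namespace as the default namespace (no prefix).
    ET.register_namespace("", FPML_NS)

    def q(tag):
        return f"{{{FPML_NS}}}{tag}"

    root = ET.Element(q("partyNotification"))
    header = ET.SubElement(root, q("header"))
    msg = ET.SubElement(header, q("messageId"),
                        {"messageIdScheme": "http://yourbank.com/msg-id"})
    msg.text = message_id
    ET.SubElement(header, q("sentBy")).text = sent_by
    ET.SubElement(header, q("sendTo")).text = "VALIDATOR"
    ET.SubElement(header, q("creationTimestamp")).text = timestamp

    party = ET.SubElement(root, q("party"))
    party_id = ET.SubElement(party, q("partyId"),
                             {"partyIdScheme": LEI_SCHEME})
    party_id.text = lei
    ET.SubElement(party, q("partyName")).text = name
    return ET.tostring(root, encoding="unicode")
```

The returned string is what would be handed to the schema validator, one message per party row under test.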

These common components should go into ores.fpml. We then need to figure out how to organise the exporters.

This pull request introduces a new 'ores.fpml' component, designed to handle Financial Products Markup Language operations, complete with its basic structure for source code, tests, and modeling. Concurrently, it updates the 'Component Creator' skill guide documentation to ensure it accurately reflects the latest logging system architecture, providing clear instructions for integrating logging functionality and resolving potential compilation issues for new components.

Highlights:

- New ores.fpml Component: A new component for Financial Products Markup Language (FPML) operations has been added, including its source, tests, and modeling setup.

- Documentation Update for Logging: The 'Component Creator' skill guide documentation has been updated to reflect current logging system changes, specifically regarding the 'ores.logging.lib' dependency and the 'ores::logging' namespace.

- CMake Configuration for New Component: The main 'projects/CMakeLists.txt' has been updated to include the new 'ores.fpml' component, and its own CMake files are set up for building the library, tests, and PlantUML diagrams.

STARTED Base the compute approach on BOINC code

Copy the BOINC data model.

- Analysis work with Gemini

- Description: As a QuantDev team, we want to build a distributed compute grid to execute high-performance financial models (ORE, Ledger, LLM) across multiple locations. We will use PostgreSQL as the central orchestrator, leveraging PGMQ for task delivery and TimescaleDB for result telemetry.

- Architectural Components (BOINC Lexicon)

- Host (Node): The persistent infrastructure service running on the hardware. It manages system resources and the lifecycle of task slots.

- Wrapper: A transient, task-specific supervisor that manages the environment, fetches data via URIs, and monitors the engine.

- App Executable (Application): The core calculation engine (e.g., ORE Studio, llama.cpp) executed by the Wrapper.

- Workunit: The abstract definition of a problem, containing pointers to input data and configurations.

- Result: A specific instance of a Workunit assigned to a Host.

- Technical Stack:

- Database: PostgreSQL (Central State Machine).

- Queueing: PGMQ (for "Hot" result dispatching).

- Time-Series: TimescaleDB (for node metrics and assimilated financial outputs).

- Storage: Postgres with BYTEA column (for zipped input/output bundles). Let's stick with this sub-optimal approach until we hit some resource issues.

- Communication: Server Pool (Load-balanced API) to buffer nodes from the database.

To provide a robust, strictly-typed orchestration layer, we must implement the following entities within the PostgreSQL instance.

- 1. The Host (Node) Entity

Represents the physical or virtual compute resource.

Table: hosts

- id: UUID (Primary Key).
- external_id: TEXT (User-defined name/hostname).
- location_id: INTEGER (FK to site/region table).
- cpu_count: INTEGER (Total logical cores).
- ram_mb: BIGINT (Total system memory).
- gpu_type: TEXT (e.g., 'A100', 'None').
- last_rpc_time: TIMESTAMPTZ (Last heartbeat from the Node).
- credit_total: NUMERIC (Total work units successfully processed).

- 2. The Application & Versioning (App Executable)

Defines the "What" – the engine being wrapped.

Table: apps

- id: SERIAL (PK).
- name: TEXT (e.g., 'ORE_STUDIO').

Table: app_versions

- id: SERIAL (PK).
- app_id: INTEGER (FK).
- wrapper_version: TEXT (Version of our custom wrapper).
- engine_version: TEXT (Version of the third-party binary).
- package_uri: TEXT (Location of the zipped Wrapper + App bundle).
- platform: TEXT (e.g., 'linux_x86_64').

- 3. The Workunit (Job Template)

The abstract problem definition. Does not contain results.

Table: workunits

- id: SERIAL (PK).

- batch_id: INTEGER (FK).

- app_version_id: INTEGER (FK).

- input_uri: TEXT (Pointer to zipped financial data/parameters).

- config_uri: TEXT (Pointer to ORE/Llama XML/JSON config).

- priority: INTEGER (Higher = sooner).

- target_redundancy: INTEGER (Default: 1. Set > 1 for volunteer/untrusted nodes).

- canonical_result_id: INTEGER (Nullable; updated by Validator).

- 4. The Result (Execution Instance)

The bridge between the DB and PGMQ.

Table: results

- id: SERIAL (PK).

- workunit_id: INTEGER (FK).

- host_id: UUID (FK to hosts.id, which is a UUID; Nullable until dispatched).

- pgmq_msg_id: BIGINT (The lease ID from PGMQ).

- server_state: INTEGER (1: Inactive, 2: Unsent, 4: In Progress, 5: Done).

- outcome: INTEGER (Status code: Success, Compute Error, Timeout).

- output_uri: TEXT (Where the Wrapper uploaded the result zip).

- received_at: TIMESTAMPTZ.

- 5. The Batch & Assimilator State

Handles the finance-specific "Batch" requirement and dependencies.

Table: batches

- id: SERIAL (PK).

- external_ref: TEXT (Link to Finance UI/Project ID).

- status: TEXT (Open, Processing, Assimilating, Closed).

Table: batch_dependencies

- parent_batch_id: INTEGER (FK).

- child_batch_id: INTEGER (FK).

Table: assimilated_data (TimescaleDB Hypertable)

- time: TIMESTAMPTZ (Logical time of financial observation).

- batch_id: INTEGER (FK).

- metric_key: TEXT (e.g., 'portfolio_var').

- metric_value: NUMERIC.

- Notes

- Schema must enforce that a Result cannot be marked 'Success' without a valid output_uri.

- The batch_state must be dynamically computable via a view or updated via trigger to show % completion.

- The app_versions table must support "side-by-side" versions for A/B testing risk engines.
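The first two notes can be sketched in application code before they are turned into a CHECK constraint and a view. This is a minimal sketch only: the server_state values come from the results table above, while the numeric outcome codes and the function names are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class ServerState(IntEnum):
    # Values taken from the results table description.
    INACTIVE = 1
    UNSENT = 2
    IN_PROGRESS = 4
    DONE = 5


class Outcome(IntEnum):
    # Hypothetical numeric codes; the notes only name the outcomes.
    SUCCESS = 1
    COMPUTE_ERROR = 2
    TIMEOUT = 3


@dataclass
class Result:
    workunit_id: int
    server_state: ServerState
    outcome: Optional[Outcome] = None
    output_uri: Optional[str] = None


def validate(result: Result) -> None:
    """Reject a 'Success' result that has no output_uri."""
    if result.outcome is Outcome.SUCCESS and not result.output_uri:
        raise ValueError("a successful result requires an output_uri")


def percent_complete(results: list[Result]) -> float:
    """Share of a batch's results in the DONE state, as a percentage."""
    if not results:
        return 0.0
    done = sum(1 for r in results if r.server_state is ServerState.DONE)
    return 100.0 * done / len(results)
```

In the database the same rule would probably be a CHECK constraint on results, and the percentage a view grouped by batch_id.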

- Links

STARTED Add rounding type table code

Values:

- Up, Down, Closest, Floor, Ceiling. Maybe none?

Notes:

- Currency should check against these.

- Supply the mapping table to mappers?

Grok:

Your current list covers several of the most commonly used rounding behaviors in applications:

- Up → always round away from zero (same result as Ceiling for positive numbers, same as Floor for negative numbers)

- Down → always round toward zero (truncation) — also called Round Down / Truncation / Towards 0

- Closest → round to nearest (with some tie-breaking rule — usually unspecified/default in casual UIs)

- Floor → round down toward −∞ (always toward more negative)

- Ceiling → round up toward +∞ (always toward more positive)

These are already very solid for most general-purpose apps. However, depending on your audience (especially finance, accounting, statistics, or scientific users), you are likely missing one or two very frequently requested/expected options.

### Commonly missing but important ones to consider adding

Bankers (or Banker's Rounding / Round Half Even / Round Half to Even) → Round to nearest, but ties (exactly .5) round to the nearest even digit. This is the statistically unbiased method, required or strongly preferred in:

- Financial applications (interest calculations, totals)

- Many accounting systems

- IEEE 754 default for binary floating-point

- Java RoundingMode.HALF_EVEN, .NET MidpointRounding.ToEven, Python round(). SQL ROUND() behaviour varies by database and data type, so it needs checking per engine.

Very commonly expected when users see "Closest" is not enough.

Half Up (or Round Half Away from Zero / Commercial Rounding) → Round to nearest, ties (.5) always round away from zero (2.5 → 3, -2.5 → -3) This is what most people intuitively think "normal rounding" means in school and is the most common tie-breaking rule in non-financial UIs.

Many users expect this when they choose "Closest".

### Less common but occasionally requested

- Half Down — ties round toward zero (2.5 → 2, -2.5 → -2) — rare in business apps

- Half Toward Zero — same as Half Down

- Half Away from Zero — same as Half Up

- Half to Odd — ties round to odd digit (very rare)

### Suggested final list (modern, user-friendly set)

Most applications end up offering roughly these 5–7 options in a dropdown:

- Up (always away from zero / toward +∞ for positive numbers)

- Down (always toward zero / truncation)

- Floor (toward −∞)

- Ceiling (toward +∞)

- Closest / Nearest (usually implies Half Up in simple UIs)

- Half Up (explicitly — very popular)

- Bankers / Round Half Even / Banker's Rounding (critically important for finance/accounting)

A very clean and common 6-item list seen in many tools:

- Up

- Down

- Floor

- Ceiling

- Nearest, Half Up

- Nearest, Half Even (Bankers)

If you want to keep the list very short, adding just Bankers to your current five is already a big improvement for serious users.
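To make the differences between these options concrete, here is a small sketch that maps the suggested six-item list onto the rounding modes of Python's decimal module. The key names ("HalfUp", "HalfEven") and the function name are placeholders, not the final values of the rounding type table.

```python
from decimal import (Decimal, ROUND_CEILING, ROUND_DOWN, ROUND_FLOOR,
                     ROUND_HALF_EVEN, ROUND_HALF_UP, ROUND_UP)

# Placeholder rounding type codes mapped to decimal rounding modes.
ROUNDING_TYPES = {
    "Up": ROUND_UP,              # away from zero
    "Down": ROUND_DOWN,          # toward zero (truncation)
    "Floor": ROUND_FLOOR,        # toward negative infinity
    "Ceiling": ROUND_CEILING,    # toward positive infinity
    "HalfUp": ROUND_HALF_UP,     # nearest, ties away from zero
    "HalfEven": ROUND_HALF_EVEN  # nearest, ties to even (Bankers)
}


def round_amount(value: str, places: int, rounding_type: str) -> Decimal:
    """Round a decimal string to `places` decimal places."""
    quantum = Decimal(1).scaleb(-places)  # places=2 gives Decimal("1E-2")
    mode = ROUNDING_TYPES[rounding_type]
    return Decimal(value).quantize(quantum, rounding=mode)
```

For example, round_amount("2.5", 0, "HalfEven") gives 2 while round_amount("2.5", 0, "HalfUp") gives 3, and round_amount("-2.1", 0, "Up") gives -3 while round_amount("-2.1", 0, "Ceiling") gives -2.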

FPML via Gemini:

FpML does not use a "Coding Scheme" (like a URL-referenced list) for rounding in the same way it does for Currencies or Business Centers. Instead, it uses a Complex Type called Rounding and a fixed Enumeration for the direction.

Because rounding logic is typically a structural part of a calculation (e.g., how to round a floating rate or a payout amount), it is "baked into" the schema itself rather than being an external lookup table.

- The Rounding Complex Type

To model rounding in your database or XML, you need two pieces of data:

- precision: An integer representing the number of decimal places.

- roundingDirection: A fixed value from the RoundingDirectionEnum.

Allowed Values for roundingDirection:

- Up: Round away from zero (or to the next higher number).

- Down: Round toward zero (truncate).

- Nearest: Standard mathematical rounding (0.5 rounds up).
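A possible in-memory model of the Rounding complex type is sketched below. It assumes the interpretation given in the list above (Up as away from zero, Nearest as half up); the class and attribute names are illustrative and do not come from generated FpML code.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP, ROUND_UP
from enum import Enum


class RoundingDirection(Enum):
    UP = "Up"
    DOWN = "Down"
    NEAREST = "Nearest"


# Assumed mapping, based on the descriptions in the list above.
_MODES = {
    RoundingDirection.UP: ROUND_UP,
    RoundingDirection.DOWN: ROUND_DOWN,
    RoundingDirection.NEAREST: ROUND_HALF_UP,
}


@dataclass(frozen=True)
class Rounding:
    rounding_direction: RoundingDirection
    precision: int  # number of decimal places

    def apply(self, value: Decimal) -> Decimal:
        """Round `value` to `precision` decimal places."""
        quantum = Decimal(1).scaleb(-self.precision)
        return value.quantize(quantum, rounding=_MODES[self.rounding_direction])
```

With this model, the five internal values (Up, Down, Closest, Floor, Ceiling) would need a mapping onto the three FpML directions on export, which is one reason to supply the mapping table to the mappers.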

Generate C++ code for FPML code

We need to fix any limitations we may have in xsdcpp.

Clicking save on connections causes exit code

Asks if we want to exit. Also clicking save several times creates folders with the same name.

Analysis on database name service code

Is this used? If so, the service should not be connecting to the admin database.

External data issues code

Problems observed:

- missing downloaded_at for a lot of data.

- spurious manifest.txt, we should only have manifest.json.

- duplication of data in catalog and main manifest. The manifest is the catalog. Remove duplication.

- for github downloads, add git commit.

- not clear who "owner" is. It has to map to an account or group in the system.

- datasets have a catalog, but they should be forced to use the catalog of the manifest:

"catalog": "Open Source Risk Engine",

- need a domain for data such as XML Schemas.

- we should ensure the methodology is one of the defined methodologies in the file.

- since datasets refer to data in subdirectories, we should add the directory to the manifest. Not needed in DB.
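Several of these checks could be automated. The sketch below assumes a manifest.json shape that is not confirmed by these notes: a top-level "catalog", a "methodologies" list of objects with a "name", and a "datasets" list whose entries carry "name", "catalog", "methodology" and "downloaded_at". All of those key names other than "catalog" and downloaded_at are hypothetical.

```python
def check_manifest(manifest: dict) -> list[str]:
    """Return a list of human-readable problems found in a manifest."""
    problems = []
    catalog = manifest.get("catalog")
    # Hypothetical layout: methodologies are objects with a "name" key.
    methodologies = {m.get("name") for m in manifest.get("methodologies", [])}

    for dataset in manifest.get("datasets", []):
        name = dataset.get("name", "<unnamed>")
        if not dataset.get("downloaded_at"):
            problems.append(f"{name}: missing downloaded_at")
        # A dataset may omit its catalog, but must not contradict the manifest.
        if dataset.get("catalog", catalog) != catalog:
            problems.append(f"{name}: catalog differs from manifest")
        if dataset.get("methodology") not in methodologies:
            problems.append(f"{name}: unknown methodology")
    return problems
```

Running a check like this over every manifest.json in the external data directory would give a quick list of the datasets that still need fixing.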

Check for uses of raw libpq code

Claude has the habit of sneaking in uses of raw libpq. We need to do a review of the code to make sure we are using sqlgen.

Notes:

- check for raw libpq in bitemporal operations.
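A first pass of this review could be automated with a small scan for the usual libpq markers: the libpq-fe.h header, PQ-prefixed function calls such as PQexec, and the PGconn/PGresult types. The sketch below works on source text passed in as a string; the function name and the patterns are only a starting point, and hits still need manual review.

```python
import re

# Patterns that typically indicate direct libpq usage in C or C++ code.
LIBPQ_PATTERNS = (
    re.compile(r'#\s*include\s*[<"]libpq-fe\.h[>"]'),
    re.compile(r'\bPQ[a-zA-Z]+\s*\('),
    re.compile(r'\bPG(conn|result)\b'),
)


def find_raw_libpq(source: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) for each suspicious line."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if any(pattern.search(line) for pattern in LIBPQ_PATTERNS):
            hits.append((number, line.strip()))
    return hits
```

Applied to every file under the source tree, this gives a list of places to check against sqlgen, starting with the bitemporal operations.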

ORE Sample Data code

We added examples and XSDs from ORE. We should consider some improvements to this dataset:

- remove unnecessary files (notebooks, pngs, pdfs, etc).

Make the icon theme "configurable" code

While we are trying to find a good icon theme, it should be possible to change the icons without having to rebuild. Ideally without having to restart, but if we have to restart that's not too bad.

Listener error in comms service code

Investigate this:

2026-01-22 20:40:20.194383 [DEBUG] [ores.comms.net.connection] Successfully wrote frame

2026-01-22 20:40:20.194413 [INFO] [ores.comms.net.server_session] Sent notification for event type 'ores.refdata.currency_changed' with 1 entity IDs to 127.0.0.1:49354

2026-01-22 20:40:21.698972 [ERROR] [ores.eventing.service.postgres_listener_service] Connection error while consuming input.

2026-01-22 20:40:21.699059 [INFO] [ores.eventing.service.postgres_listener_service] Listener thread stopped.

Implement party related entities at database level code

The first step of this work is to get the entities to work at the database schema level.

- Table Structure: party

| Field Name | Data Type | Constraints | Commentary |
|---|---|---|---|
| party_id | Integer | PK, Auto-Inc | Internal surrogate key for database performance and foreign key stability. |
| tenant_id | Integer | FK (tenant) | The "Owner" of this record. Ensures GigaBank's client list isn't visible to AlphaHedge. |
| party_name | String(255) | Not Null | The full legal name of the entity (e.g., "Barclays Bank PLC"). |
| short_name | String(50) | Unique | A mnemonic or "Ticker" style name used for quick UI displays (e.g., "BARC-LDN"). |
| lei | String(20) | Unique/Null | The ISO 17442 Legal Entity Identifier. Critical for regulatory reporting and GLEIF integration. |
| is_internal | Boolean | Default: False | Flag: If TRUE, this party represents a branch or entity belonging to the Tenant (The Bank). |
| party_type_id | Integer | FK (scheme) | Categorizes the entity: Bank, Hedge Fund, Corporate, Central Bank, or Exchange. |
| postal_address | Text | | Used for generating legal confirmations and settlement instructions. |
| business_center_id | Integer | FK (scheme) | Links to an FpML Business Center (e.g., GBLO, USNY). Determines holiday calendars for settlement. |
| status | Enum | Active/Inactive | Controls whether trades can be booked against this entity. |
| created_at | Timestamp | | Audit trail for when the entity was onboarded. |

Add party entity code

Party analysis.

| Field | Type | Description | Foreign Key Reference |
|---|---|---|---|
| party_id | UUID | Primary key (globally unique identifier) | - |
| full_name | TEXT | Legal or registered name | - |
| short_code | TEXT | Short code for the party | - |
| organization_type | INT | Type of organization | → organization_type_scheme |
| parent_party_id | UUID | References parent party (self-referencing); must match the type of party_id | → party_id (nullable) |

Add party identifier entity code

Allows a party to have multiple external identifiers (e.g., LEI, BIC).

| Field | Type | Description | Foreign Key Reference |
|---|---|---|---|
| party_id | UUID | References party.party_id | → party |
| id_value | TEXT | Identifier value, e.g., "549300…" | - |
| id_scheme | TEXT | Scheme defining identifier type, e.g. LEI | → party_id_scheme |
| description | TEXT | Additional information about the identifier | - |

Primary key: composite (party_id, id_scheme)
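The effect of the composite key can be tried out quickly with an in-memory SQLite database. This is a stand-in for the real PostgreSQL schema: the column types are simplified to TEXT, and the helper names are made up for the example.

```python
import sqlite3

SCHEMA = """
CREATE TABLE party (
    party_id  TEXT PRIMARY KEY,
    full_name TEXT NOT NULL
);
CREATE TABLE party_identifier (
    party_id    TEXT NOT NULL REFERENCES party(party_id),
    id_scheme   TEXT NOT NULL,
    id_value    TEXT NOT NULL,
    description TEXT,
    PRIMARY KEY (party_id, id_scheme)
);
"""


def open_db() -> sqlite3.Connection:
    """Create an in-memory database with the two sketch tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this per connection
    conn.executescript(SCHEMA)
    return conn


def add_identifier(conn, party_id: str, id_scheme: str, id_value: str) -> None:
    """Attach one external identifier to a party."""
    conn.execute(
        "INSERT INTO party_identifier (party_id, id_scheme, id_value) "
        "VALUES (?, ?, ?)",
        (party_id, id_scheme, id_value),
    )
```

With this key a party can carry one LEI and one BIC, but a second LEI for the same party raises sqlite3.IntegrityError. If a party ever needs several identifiers under the same scheme, the key would have to include id_value as well.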

Contact information entity code

Contact Information is a container that groups various ways to reach an entity.

Contact Information can be associated with either a Party (at the legal entity level) or a BusinessUnit (at the desk/operational level). To build a robust trading system, your database should support a polymorphic or flexible link to handle this.

The Logic of the Link:

- Link to Party: Used for Legal and Regulatory contact details. This is the "Head Office" address, the legal service of process address, or the general firm-wide contact for the LEI.

- Link to Business Unit: Used for Execution and Operational contact details. This is where your "Machine" or "Human" actually lives. It links the trader or algo to a specific desk's phone number, email, and—most importantly—its Business Center (Holiday Calendar).

- Type: Contact Information

This is the main container for how to reach a party or person.

- address (Complex): The physical location.

- phone (String): Multiple entries allowed (Work, Mobile, Fax).

- email (String): Electronic mail addresses.

- webPage (String): The entity's URL.

- Type: Address

The physical street address structure.

- streetAddress (Complex): Usually a list of strings (Line 1, Line 2, etc.).

- city (String): The city or municipality.

- state (String): The state, province, or region.

- country (Scheme): An ISO 3166 2-letter country code (e.g., US, GB).

- postalCode (String): The ZIP or Postcode.
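One way to picture the flexible link is a contact record that points at exactly one owner, either a party or a business unit. The sketch below uses plain dataclasses; the attribute names follow the lists above in snake case, and the "exactly one owner" rule is an assumption about how the link would be enforced.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Address:
    street_address: list[str]          # Line 1, Line 2, etc.
    city: str
    country: str                       # ISO 3166 two-letter code
    state: Optional[str] = None
    postal_code: Optional[str] = None


@dataclass
class ContactInformation:
    # Exactly one of these two owner links is expected to be set.
    party_id: Optional[str] = None
    business_unit_id: Optional[int] = None
    address: Optional[Address] = None
    phones: list[str] = field(default_factory=list)
    emails: list[str] = field(default_factory=list)
    web_page: Optional[str] = None

    def __post_init__(self) -> None:
        has_party = self.party_id is not None
        has_unit = self.business_unit_id is not None
        if has_party == has_unit:
            raise ValueError(
                "set exactly one of party_id or business_unit_id")
```

At the database level the same rule could be a CHECK constraint over two nullable foreign keys, which avoids a fully polymorphic owner_type/owner_id pair.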

Add a organisation type scheme entity code

Indicates a type of organization.

- Obtained on 2016-06-13

- Version 2-0

- URL: http://www.fpml.org/coding-scheme/organization-type-2-0.xml

- Code: MSP

- Name: Major Swap Participant

- Description: A significant participant in the swaps market, for example as defined by the Dodd-Frank Act.

- Code: NaturalPerson

- Name: Natural Person

- Description: A human being.

- Code: non-SD/MSP

- Name: Non Swap Dealer or Major Swap Participant

- Description: A firm that is neither a swap dealer nor a major swaps participant under the Dodd-Frank Act.

- Code: SD

- Name: Swap Dealer

- Description: Registered swap dealer.

Business unit entity code

Represents internal organizational units (e.g., desks, departments, branches). Supports hierarchical structure.

| Field | Type | Description | Foreign Key Reference |
|---|---|---|---|
| unit_id | INT | Primary key | - |
| party_id | UUID | Top-level legal entity this unit belongs to | → party |
| parent_business_unit_id | INT | References parent unit (self-referencing) | → business_unit.unit_id (nullable) |
| unit_name | TEXT | Human-readable name (e.g., "FX Options Desk") | - |
| unit_id_code | TEXT | Optional internal code or alias | - |
| business_centre | TEXT | Business centre for the unit | → business centre scheme |

business_centre may be null (for example, we may want to have a global desk and then a London desk).

Book and Portfolio entities code

Support a single, unified hierarchical tree for risk aggregation and reporting (Portfolios) while maintaining operational accountability and legal/bookkeeping boundaries at the leaf level (Books).

- Portfolio

Logical Aggregation Nodes. Represents organizational, risk, or reporting groupings. Never holds trades directly.

| Field | Type | Description |
|---|---|---|
| portfolio_id (PK) | UUID | Globally unique identifier. |
| parent_portfolio_id | UUID (FK) | Self-referencing FK. NULL = root node. |
| name | TEXT | Human-readable name (e.g., "Global Rates", "APAC Credit"). |
| owner_unit_id | INT (FK) | Business unit (desk/branch) responsible for management. |
| purpose_type | ENUM | 'Risk', 'Regulatory', 'ClientReporting', 'Internal'. |
| aggregation_ccy | CHAR(3) | Currency for P&L/risk aggregation at this node (ISO 4217). |
| is_virtual | BOOLEAN | If true, node is purely for on-demand reporting (not persisted in trade attribution). |
| created_at | TIMESTAMP | Audit trail. |

Note: Portfolios do not have a legal_entity_id. Legal context is derived from descendant Books.

- Book

Operational Ledger Leaves. The only entity that holds trades. Serves as the basis for accounting, ownership, and regulatory capital treatment.

| Field | Type | Description |
|---|---|---|
| book_id (PK) | UUID | Globally unique identifier. |
| parent_portfolio_id | UUID (FK) | Mandatory: Links to exactly one portfolio. |
| name | TEXT | Must be unique within legal entity (e.g., "FXO_EUR_VOL_01"). |
| legal_entity_id | UUID (FK) | Mandatory: References party.party_id (must be an LEI-mapped legal entity). |
| ledger_ccy | CHAR(3) | Functional/accounting currency (ISO 4217). |
| gl_account_ref | TEXT | Reference to external GL (e.g., "GL-10150-FXO"). May be nullable if not integrated. |
| cost_center | TEXT | Internal finance code for P&L attribution. |
| book_status | ENUM | 'Active', 'Closed', 'Frozen'. |
| is_trading_book | BOOLEAN | Critical for Basel III/IV: distinguishes Trading vs. Banking Book. |
| created_at | TIMESTAMP | For audit. |
| closed_at | TIMESTAMP | When book_status = 'Closed'. |

Objectives:

- Strict separation: Portfolios = logical; Books = operational

- Legal ownership at Book level → critical for regulatory capital, legal netting, tax

- Hierarchy via parent_portfolio_id

- Trading vs. Banking book flag → Basel requirement

Hierarchy Integrity Constraints:

- Rule: A Portfolio must not directly contain another Portfolio and a Book at the same level if that violates business policy.

- Enforce via application logic or DB constraint (e.g., CHECK that a Portfolio is either "container-only" or "leaf-container", but typically Portfolios can contain both sub-Portfolios and Books—this is normal).

- Cycle Prevention: Ensure no circular references (parent → child → parent). Use triggers or application validation.

- Multi-Legal Entity Support: Your model allows Books under the same Portfolio to belong to different legal entities. Is this intentional?

- Allowed in some firms (for consolidated risk views).

- Forbidden in others (e.g., regulatory ring-fencing).

- Recommendation: Add a validation rule (application-level): "If a Portfolio contains any Books, all descendant Books must belong to the same legal_entity_id." Or, if mixed entities are allowed, flag the Portfolio as 'MultiEntity' in purpose_type.

- Trade Ownership: Explicitly state: Every trade must have a book_id (FK). No trade exists outside a Book. This is implied but should be documented as a core invariant.

- Lifecycle & Governance: Add version or valid_from/valid_to if Books/Portfolios evolve over time (e.g., name changes, reorgs).

- Especially important for audit and historical P&L.

- Consider owner_person_id (trader or book manager) for Books.

- Naming & Uniqueness: Enforce: (legal_entity_id, name) must be unique for Books.

- Prevents ambiguous book names like "RatesDesk" used by two entities.

- Book Closure Policy: When a Book is Closed, should existing trades remain?

- Yes (typical). But no new trades allowed.

- Your book_status covers this
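The cycle-prevention and multi-legal-entity checks above could be done in application code roughly as follows. This is a sketch over plain dictionaries rather than database rows; the function names and the dictionary layout are assumptions made for the example.

```python
from typing import Optional


def would_create_cycle(parents: dict[str, Optional[str]],
                       node: str, new_parent: Optional[str]) -> bool:
    """True if making `new_parent` the parent of `node` closes a loop.

    `parents` maps each portfolio id to its current parent (None = root).
    """
    seen = set()  # guards against loops that already exist in the data
    current = new_parent
    while current is not None and current not in seen:
        if current == node:
            return True
        seen.add(current)
        current = parents.get(current)
    return False


def legal_entities(portfolio_id: str,
                   children: dict[str, list[str]],
                   books: dict[str, list[str]]) -> set[str]:
    """Collect legal_entity_id values of all Books under a portfolio.

    `children` maps a portfolio id to its sub-portfolio ids, and `books`
    maps a portfolio id to the legal_entity_id of each directly held Book.
    """
    entities = set(books.get(portfolio_id, []))
    for child in children.get(portfolio_id, []):
        entities |= legal_entities(child, children, books)
    return entities
```

A portfolio whose legal_entities set has more than one member is either rejected or flagged as multi-entity, depending on which policy is chosen.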

Combined Hierarchy Rules (Refined):

| Rule | Description |
|---|---|
| Leaf Invariant | Only Books may hold trades. Portfolios are purely aggregators. |
| Single Parent | Every Book and non-root Portfolio has exactly one parent. |
| Legal Entity Scope | A Book declares its legal owner. A Portfolio's legal scope is the union of its Books' entities. |
| Permissioning | Trade permission → granted on book_id. View/Analyze permission → granted on portfolio_id (includes subtree). |
| Accounting Boundary | P&L, capital, and ledger entries are computed per Book, then rolled up through Portfolios in aggregation_ccy. |

Add business centre scheme entity code

The following is the analysis for adding support to party schemes.

Note: add a foreign key to the country table, which may be null in some cases.

The coding-scheme accepts a 4 character code of the real geographical business calendar location or FpML format of the rate publication calendar. While the 4 character codes of the business calendar location are implicitly locatable and used for identifying a bad business day for the purpose of payment and rate calculation day adjustments, the rate publication calendar codes are used in the context of the fixing day offsets. The 4 character codes are based on the business calendar location some of which based on the ISO country code or exchange code, or some other codes. Additional business day calendar location codes could be built according to the following rules: the first two characters represent the ISO 3166 country code [https://www.iso.org/obp/ui/#search/code/], the next two characters represent either a) the first two letters of the location, if the location name is one word, b) the first letter of the first word followed by the first letter of the second word, if the location name consists of at least two words. Note: for creating new city codes for US and Canada: the two-letter combinations used in postal US states (http://pe.usps.gov/text/pub28/28apb.htm ) and Canadian provinces (http://www.canadapost.ca/tools/pg/manual/PGaddress-e.asp) abbreviations cannot be utilized (e.g. the code for Denver, United States is USDN and not USDE, because of the DE is the abbreviation for Delaware state ). Exchange codes could be added based on the ISO 10383 MIC code [https://www.iso20022.org/sites/default/files/ISO10383_MIC/ISO10383_MIC.xls] according to the following rules: 1. it would be the acronym of the MIC. If acronym is not available, 2. it would be the MIC code. If the MIC code starts with an 'X', 3. the FpML AWG will compose the code. 
'Publication Calendar Day', per 2021 ISDA Interest Rate Derivatives Definitions, means, in respect of a benchmark, any day on which the Administrator is due to publish the rate for such benchmark pursuant to its publication calendar, as updated from time to time. FpML format of the rate publication calendar. The construct: CCY-[short codes to identify the publisher], e.g. GBP-ICESWAP. The FpML XAPWG will compose the code.
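The composition rule for new location codes can be sketched as a small function. It covers only the country-plus-location rule described above; the reserved set stands in for the US state and Canadian province abbreviations that must be avoided, and both the function name and that parameter are illustrative. Existing codes in the scheme do not always follow the rule, so this is not a way to derive them.

```python
def compose_business_centre_code(country: str, location: str,
                                 reserved: frozenset[str] = frozenset()) -> str:
    """Build a 4-character code from an ISO 3166 country code and a location.

    One-word locations contribute their first two letters; locations with
    two or more words contribute the first letter of the first two words.
    """
    words = location.split()
    if len(country) != 2 or not words:
        raise ValueError("need a 2-letter country code and a location name")
    if len(words) == 1:
        suffix = words[0][:2]
    else:
        suffix = words[0][0] + words[1][0]
    suffix = suffix.upper()
    if len(suffix) != 2:
        raise ValueError("location name too short to derive a code")
    if suffix in reserved:
        # e.g. Denver: 'DE' is the postal abbreviation for Delaware.
        raise ValueError(f"suffix {suffix} clashes with a reserved abbreviation")
    return country.upper() + suffix
```

For example, ("GB", "London") gives GBLO and ("US", "New York") gives USNY, while ("US", "Denver") with DE in the reserved set raises an error, which is why the scheme uses the hand-picked USDN instead.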

- Obtained on 2025-04-25

- Version 9-4

- URL: http://www.fpml.org/coding-scheme/business-center-9-4.xml

- Code: The unique string/code identifying the business center, usually a 4-character code based on a 2-character ISO country code and a 2 character code for the city, but with exceptions for special cases such as index publication calendars, as described above.

- Code: AEAB

- Description: Abu Dhabi, Business Day (as defined in 2021 ISDA Definitions Section 2.1.10 (ii))

- Code: AEAD

- Description: Abu Dhabi, Settlement Day (as defined in 2021 ISDA Definitions Section 2.1.10 (i))

- Code: AEDU

- Description: Dubai, United Arab Emirates

- Code: AMYE

- Description: Yerevan, Armenia

- Code: AOLU

- Description: Luanda, Angola

- Code: ARBA

- Description: Buenos Aires, Argentina

- Code: ATVI

- Description: Vienna, Austria

- Code: AUAD

- Description: Adelaide, Australia

- Code: AUBR

- Description: Brisbane, Australia

- Code: AUCA

- Description: Canberra, Australia

- Code: AUDA

- Description: Darwin, Australia

- Code: AUME

- Description: Melbourne, Australia

- Code: AUPE

- Description: Perth, Australia

- Code: AUSY

- Description: Sydney, Australia

- Code: AZBA

- Description: Baku, Azerbaijan

- Code: BBBR

- Description: Bridgetown, Barbados

- Code: BDDH

- Description: Dhaka, Bangladesh

- Code: BEBR

- Description: Brussels, Belgium

- Code: BGSO

- Description: Sofia, Bulgaria

- Code: BHMA

- Description: Manama, Bahrain

- Code: BMHA

- Description: Hamilton, Bermuda

- Code: BNBS

- Description: Bandar Seri Begawan, Brunei

- Code: BOLP

- Description: La Paz, Bolivia

- Code: BRBD

- Description: Brazil Business Day. This means a business day in all of Sao Paulo, Rio de Janeiro or Brasilia not otherwise declared as a financial market holiday by the Bolsa de Mercadorias & Futuros (BM&F). BRBD should not be used for setting fixing time, instead the city centers (e.g. BRBR, BRRJ, BRSP) should be used, because they are locatable places.

- Code: BSNA

- Description: Nassau, Bahamas

- Code: BWGA

- Description: Gaborone, Botswana

- Code: BYMI

- Description: Minsk, Belarus

- Code: CACL

- Description: Calgary, Canada

- Code: CAFR

- Description: Fredericton, Canada. Covers all New Brunswick province.

- Code: CAMO

- Description: Montreal, Canada

- Code: CAOT

- Description: Ottawa, Canada

- Code: CATO

- Description: Toronto, Canada

- Code: CAVA

- Description: Vancouver, Canada

- Code: CAWI

- Description: Winnipeg, Canada

- Code: CHBA

- Description: Basel, Switzerland

- Code: CHGE

- Description: Geneva, Switzerland

- Code: CHZU

- Description: Zurich, Switzerland

- Code: CIAB

- Description: Abidjan, Cote d'Ivoire

- Code: CLSA

- Description: Santiago, Chile

- Code: CMYA

- Description: Yaounde, Cameroon

- Code: CNBE

- Description: Beijing, China

- Code: CNSH

- Description: Shanghai, China

- Code: COBO

- Description: Bogota, Colombia

- Code: CRSJ

- Description: San Jose, Costa Rica

- Code: CWWI

- Description: Willemstad, Curacao

- Code: CYNI

- Description: Nicosia, Cyprus

- Code: CZPR

- Description: Prague, Czech Republic

- Code: DECO

- Description: Cologne, Germany

- Code: DEDU

- Description: Dusseldorf, Germany

- Code: DEFR

- Description: Frankfurt, Germany

- Code: DEHA

- Description: Hannover, Germany

- Code: DEHH

- Description: Hamburg, Germany

- Code: DELE

- Description: Leipzig, Germany

- Code: DEMA

- Description: Mainz, Germany

- Code: DEMU

- Description: Munich, Germany

- Code: DEST

- Description: Stuttgart, Germany

- Code: DKCO

- Description: Copenhagen, Denmark

- Code: DOSD

- Description: Santo Domingo, Dominican Republic

- Code: DZAL

- Description: Algiers, Algeria

- Code: ECGU

- Description: Guayaquil, Ecuador

- Code: EETA

- Description: Tallinn, Estonia

- Code: EGCA

- Description: Cairo, Egypt

- Code: ESAS

- Description: ESAS Settlement Day (as defined in 2006 ISDA Definitions Section 7.1 and Supplement Number 15 to the 2000 ISDA Definitions)

- Code: ESBA

- Description: Barcelona, Spain

- Code: ESMA

- Description: Madrid, Spain

- Code: ESSS

- Description: San Sebastian, Spain

- Code: ETAA

- Description: Addis Ababa, Ethiopia

- Code: EUR-ICESWAP

- Description: Publication dates for ICE Swap rates based on EUR-EURIBOR rates

- Code: EUTA

- Description: TARGET Settlement Day

- Code: FIHE

- Description: Helsinki, Finland

- Code: FRPA

- Description: Paris, France

- Code: GBED

- Description: Edinburgh, Scotland

- Code: GBLO

- Description: London, United Kingdom

- Code: GBP-ICESWAP

- Description: Publication dates for GBP ICE Swap rates

- Code: GETB

- Description: Tbilisi, Georgia

- Code: GGSP

- Description: Saint Peter Port, Guernsey

- Code: GHAC

- Description: Accra, Ghana

- Code: GIGI

- Description: Gibraltar, Gibraltar

- Code: GMBA

- Description: Banjul, Gambia

- Code: GNCO

- Description: Conakry, Guinea

- Code: GRAT

- Description: Athens, Greece

- Code: GTGC

- Description: Guatemala City, Guatemala

- Code: HKHK

- Description: Hong Kong, Hong Kong

- Code: HNTE

- Description: Tegucigalpa, Honduras

- Code: HRZA

- Description: Zagreb, Republic of Croatia

- Code: HUBU

- Description: Budapest, Hungary

- Code: IDJA

- Description: Jakarta, Indonesia

- Code: IEDU

- Description: Dublin, Ireland

- Code: ILJE

- Description: Jerusalem, Israel

- Code: ILS-SHIR

- Description: Publication dates of the ILS-SHIR index.

- Code: ILS-TELBOR

- Description: Publication dates of the ILS-TELBOR index.

- Code: ILTA

- Description: Tel Aviv, Israel

- Code: INAH

- Description: Ahmedabad, India

- Code: INBA

- Description: Bangalore, India

- Code: INCH

- Description: Chennai, India

- Code: INHY

- Description: Hyderabad, India

- Code: INKO

- Description: Kolkata, India

- Code: INMU

- Description: Mumbai, India

- Code: INND

- Description: New Delhi, India

- Code: IQBA

- Description: Baghdad, Iraq

- Code: IRTE

- Description: Teheran, Iran

- Code: ISRE

- Description: Reykjavik, Iceland

- Code: ITMI

- Description: Milan, Italy

- Code: ITRO

- Description: Rome, Italy

- Code: ITTU

- Description: Turin, Italy

- Code: JESH

- Description: St. Helier, Channel Islands, Jersey

- Code: JMKI

- Description: Kingston, Jamaica

- Code: JOAM

- Description: Amman, Jordan

- Code: JPTO

- Description: Tokyo, Japan

- Code: KENA

- Description: Nairobi, Kenya

- Code: KHPP

- Description: Phnom Penh, Cambodia

- Code: KRSE

- Description: Seoul, Republic of Korea

- Code: KWKC

- Description: Kuwait City, Kuwait

- Code: KYGE

- Description: George Town, Cayman Islands

- Code: KZAL

- Description: Almaty, Kazakhstan

- Code: LAVI

- Description: Vientiane, Laos

- Code: LBBE

- Description: Beirut, Lebanon

- Code: LKCO

- Description: Colombo, Sri Lanka

- Code: LULU

- Description: Luxembourg, Luxembourg

- Code: LVRI

- Description: Riga, Latvia

- Code: MACA

- Description: Casablanca, Morocco

- Code: MARA

- Description: Rabat, Morocco

- Code: MCMO

- Description: Monaco, Monaco

- Code: MNUB

- Description: Ulan Bator, Mongolia

- Code: MOMA

- Description: Macau, Macao

- Code: MTVA

- Description: Valletta, Malta

- Code: MUPL

- Description: Port Louis, Mauritius

- Code: MVMA

- Description: Male, Maldives

- Code: MWLI

- Description: Lilongwe, Malawi

- Code: MXMC

- Description: Mexico City, Mexico

- Code: MYKL

- Description: Kuala Lumpur, Malaysia

- Code: MYLA

- Description: Labuan, Malaysia

- Code: MZMA

- Description: Maputo, Mozambique

- Code: NAWI

- Description: Windhoek, Namibia

- Code: NGAB

- Description: Abuja, Nigeria

- Code: NGLA

- Description: Lagos, Nigeria

- Code: NLAM

- Description: Amsterdam, Netherlands

- Code: NLRO

- Description: Rotterdam, Netherlands

- Code: NOOS

- Description: Oslo, Norway

- Code: NPKA

- Description: Kathmandu, Nepal

- Code: NYFD

- Description: New York Fed Business Day (as defined in 2006 ISDA Definitions Section 1.9, 2000 ISDA Definitions Section 1.9, and 2021 ISDA Definitions Section 2.1.7)

- Code: NYSE

- Description: New York Stock Exchange Business Day (as defined in 2006 ISDA Definitions Section 1.10, 2000 ISDA Definitions Section 1.10, and 2021 ISDA Definitions Section 2.1.8)

- Code: NZAU

- Description: Auckland, New Zealand

- Code: NZBD

- Description: New Zealand Business Day (proposed effective date: 2025-10-06)

- Code: NZWE

- Description: Wellington, New Zealand

- Code: OMMU

- Description: Muscat, Oman

- Code: PAPC

- Description: Panama City, Panama

- Code: PELI

- Description: Lima, Peru

- Code: PHMA

- Description: Manila, Philippines

- Code: PHMK

- Description: Makati, Philippines

- Code: PKKA

- Description: Karachi, Pakistan

- Code: PLWA

- Description: Warsaw, Poland

- Code: PRSJ

- Description: San Juan, Puerto Rico

- Code: PTLI

- Description: Lisbon, Portugal

- Code: QADO

- Description: Doha, Qatar

- Code: ROBU

- Description: Bucharest, Romania

- Code: RSBE

- Description: Belgrade, Serbia

- Code: RUMO

- Description: Moscow, Russian Federation

- Code: SAAB

- Description: Abha, Saudi Arabia

- Code: SAJE

- Description: Jeddah, Saudi Arabia

- Code: SARI

- Description: Riyadh, Saudi Arabia

- Code: SEST

- Description: Stockholm, Sweden

- Code: SGSI

- Description: Singapore, Singapore

- Code: SILJ

- Description: Ljubljana, Slovenia

- Code: SKBR

- Description: Bratislava, Slovakia

- Code: SLFR

- Description: Freetown, Sierra Leone

- Code: SNDA

- Description: Dakar, Senegal

- Code: SVSS

- Description: San Salvador, El Salvador

- Code: THBA

- Description: Bangkok, Thailand

- Code: TNTU

- Description: Tunis, Tunisia

- Code: TRAN

- Description: Ankara, Turkey

- Code: TRIS

- Description: Istanbul, Turkey

- Code: TTPS

- Description: Port of Spain, Trinidad and Tobago

- Code: TWTA

- Description: Taipei, Taiwan

- Code: TZDA

- Description: Dar es Salaam, Tanzania

- Code: TZDO

- Description: Dodoma, Tanzania

- Code: UAKI

- Description: Kiev, Ukraine

- Code: UGKA

- Description: Kampala, Uganda

- Code: USBO

- Description: Boston, Massachusetts, United States

- Code: USCH

- Description: Chicago, United States

- Code: USCR

- Description: Charlotte, North Carolina, United States

- Code: USDC

- Description: Washington, District of Columbia, United States

- Code: USD-ICESWAP

- Description: Publication dates for ICE Swap rates based on USD-LIBOR rates

- Code: USD-MUNI

- Description: Publication dates for the USD-Municipal Swap Index

- Code: USDN

- Description: Denver, United States

- Code: USDT

- Description: Detroit, Michigan, United States

- Code: USGS

- Description: U.S. Government Securities Business Day (as defined in 2006 ISDA Definitions Section 1.11 and 2000 ISDA Definitions Section 1.11)

- Code: USHL

- Description: Honolulu, Hawaii, United States

- Code: USHO

- Description: Houston, United States

- Code: USLA

- Description: Los Angeles, United States

- Code: USMB

- Description: Mobile, Alabama, United States

- Code: USMN

- Description: Minneapolis, United States

- Code: USNY

- Description: New York, United States

- Code: USPO

- Description: Portland, Oregon, United States

- Code: USSA

- Description: Sacramento, California, United States

- Code: USSE

- Description: Seattle, United States

- Code: USSF

- Description: San Francisco, United States

- Code: USWT

- Description: Wichita, United States

- Code: UYMO

- Description: Montevideo, Uruguay

- Code: UZTA

- Description: Tashkent, Uzbekistan

- Code: VECA

- Description: Caracas, Venezuela

- Code: VGRT

- Description: Road Town, Virgin Islands (British)

- Code: VNHA

- Description: Hanoi, Vietnam

- Code: VNHC

- Description: Ho Chi Minh (formerly Saigon), Vietnam

- Code: YEAD

- Description: Aden, Yemen

- Code: ZAJO

- Description: Johannesburg, South Africa

- Code: ZMLU

- Description: Lusaka, Zambia

- Code: ZWHA

- Description: Harare, Zimbabwe

Methodology screen review code

- make name column bigger.

- do not show description and URI by default.

- use tabs in detail window

- show all meta-data in details window.

Librarian errors code

When there is a failure publishing a dataset we just see "failed" in the wizard without any further details. Server log file says:

2026-01-21 22:21:07.676351 [DEBUG] [ores.dq.service.publication_service] Publishing dataset: slovaris.currencies with artefact_type: Solvaris Currencies
2026-01-21 22:21:07.676381 [ERROR] [ores.dq.service.publication_service] Unknown artefact_type: Solvaris Currencies for dataset: slovaris.currencies
2026-01-21 22:21:07.676412 [ERROR] [ores.dq.service.publication_service] Failed to publish slovaris.currencies: Unknown artefact_type: Solvaris Currencies
2026-01-21 22:21:07.676437 [INFO] [ores.dq.service.publication_service] Publishing dataset: slovaris.country_flags (Solvaris Country Flag Images)
2026-01-21 22:21:07.676460 [DEBUG] [ores.dq.service.publication_service] Publishing dataset: slovaris.country_flags with artefact_type: Solvaris Country Flag Images
2026-01-21 22:21:07.676491 [ERROR] [ores.dq.service.publication_service] Unknown artefact_type: Solvaris Country Flag Images for dataset: slovaris.country_flags
2026-01-21 22:21:07.676518 [ERROR] [ores.dq.service.publication_service] Failed to publish slovaris.country_flags: Unknown artefact_type: Solvaris Country Flag Images
2026-01-21 22:21:07.676542 [INFO] [ores.dq.service.publication_service] Publishing dataset: slovaris.countries (Solvaris Countries)
2026-01-21 22:21:07.676566 [DEBUG] [ores.dq.service.publication_service] Publishing dataset: slovaris.countries with artefact_type: Solvaris Countries
2026-01-21 22:21:07.676592 [ERROR] [ores.dq.service.publication_service] Unknown artefact_type: Solvaris Countries for dataset: slovaris.countries
2026-01-21 22:21:07.676618 [ERROR] [ores.dq.service.publication_service] Failed to publish slovaris.countries: Unknown artefact_type: Solvaris Countries

To reproduce, change artefact type in codegen back to "Solvaris Currencies".
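One possible way to surface these errors in the wizard is to return a per-dataset result that carries the error text, rather than a single pass/fail flag. The sketch below is only an illustration: `publication_result` and `summarise_failures` are hypothetical names, not existing types in the code base.

```cpp
#include <string>
#include <vector>

// Hypothetical result type: one entry per dataset the wizard tried to publish.
struct publication_result {
    std::string dataset_code;   // e.g. "slovaris.currencies"
    bool success;
    std::string error_message;  // empty on success
};

// Builds the text the wizard could show instead of a bare "failed":
// one line per failed dataset, in the order the results were produced.
std::string summarise_failures(const std::vector<publication_result>& results) {
    std::string summary;
    for (const auto& r : results) {
        if (r.success)
            continue;
        if (!summary.empty())
            summary += '\n';
        summary += r.dataset_code + ": " + r.error_message;
    }
    return summary;
}
```

An empty summary would mean that every dataset was published successfully.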

General session dialog code

At present we can't see a dialog with sessions for all users. We need to go to accounts to see a specific user session. We need to modify this dialog to be able to show either all sessions or sessions for a specific user.

Notes:

- it should be possible to kick out a user or selection of users.

- it should be possible to send a message to a user or all users.

- session icon is just a circle

- add paging support.

Issues with event viewer code

- no icon.

- can't filter by event type.

- always collect events in ring buffer. Search for story on this.
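As a starting point for the ring buffer note above, a minimal sketch of a fixed-capacity buffer that overwrites the oldest event once full. The class name `event_ring_buffer` and the use of plain strings for events are assumptions made for illustration; the real event type will differ.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical fixed-capacity buffer: once full, the oldest event is overwritten.
class event_ring_buffer {
public:
    explicit event_ring_buffer(std::size_t capacity)
        : slots_(capacity), next_(0), size_(0) {}

    void push(std::string event) {
        if (slots_.empty())
            return; // zero capacity: nothing can be stored
        slots_[next_] = std::move(event);
        next_ = (next_ + 1) % slots_.size();
        if (size_ < slots_.size())
            ++size_;
    }

    // Returns the retained events, oldest first.
    std::vector<std::string> snapshot() const {
        std::vector<std::string> out;
        out.reserve(size_);
        // Until the buffer wraps, the oldest event sits in slot 0;
        // afterwards it sits in the slot that will be overwritten next.
        const std::size_t start = (size_ < slots_.size()) ? 0 : next_;
        for (std::size_t i = 0; i < size_; ++i)
            out.push_back(slots_[(start + i) % slots_.size()]);
        return out;
    }

private:
    std::vector<std::string> slots_;
    std::size_t next_;
    std::size_t size_;
};
```

Collecting into such a buffer unconditionally would let the event viewer show recent history even when it is opened after the events occurred.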

Add action type to trades code

It seems FpML has some kind of trade-activity-like actions:

Improve tag support code

At present we are not tagging DQ entities very well. For example, crypto currencies should be tagged as both crypto and currencies, etc.

Also tags have duplicates, and versioning does not seem to be working:

ores_frosty_leaf=> select * from dq_tags_artefact_tbl;

| dataset_id | tag_id | version | name | description |
|---|---|---|---|---|
| 93d187a9-fa26-4569-ab26-18154b58c5c7 | 65bd2824-bd43-4090-9f1a-a97dfef529ca | 0 | flag | Country and region flag images |
| c8912e75-9238-4f97-b8da-065a11b8bcc8 | 75ee83a7-2c54-448c-b073-8d68107d136e | 0 | cryptocurrency | Cryptocurrency icon images |
| 30c0e0b8-c486-4bc1-a6f4-19db8fa691c9 | 2d3eace5-733c-47b3-b328-f99a358fe2a8 | 0 | currency | Currency reference data |
| d8093e17-8954-4928-a705-4fc03e400eee | 71e0c456-94fb-4e44-83f1-33ae1139e333 | 0 | currency | Non-ISO currency reference data |
| d3a4e751-ae30-497c-96b1-f727201d536b | c57ee434-bbc2-48c1-9a59-c9799e701288 | 0 | cryptocurrency | Cryptocurrency reference data |
| 44ff5afd-a1b7-42d2-84a7-432092616c40 | d6453cf6-f23b-4f97-b600-51fcad21c8aa | 0 | geolocation | IP address geolocation reference data |

We need a generic tags table and then a junction table between, say, datasets and tags. Delete all of the existing half-baked tag implementations. Also have a look at the story in the backlog about tags and labels.

Authentication failed dialog does not have details code

At present we show the C++ exception:

Authentication failed: Failed to connect to server: Connection refused [system:111 at /home/marco/Development/OreStudio/OreStudio.local1/build/output/linux-clang-debug/vcpkg_installed/x64-linux/include/boost/asio/detail/reactive_socket_connect_op.hpp:97:37 in function 'static void boost::asio::detail::reactive_socket_connect_op<boost::asio::detail::range_connect_op<boost::asio::ip::tcp, boost::asio::any_io_executor, boost::asio::ip::basic_resolver_results<boost::asio::ip::tcp>, boost::asio::detail::default_connect_condition, boost::asio::detail::awaitable_handler<boost::asio::any_io_executor, boost::system::error_code, boost::asio::ip::basic_endpoint<boost::asio::ip::tcp>>>, boost::asio::any_io_executor>::do_complete(void *, operation *, const boost::system::error_code &, std::size_t) [Handler = boost::asio::detail::range_connect_op<boost::asio::ip::tcp, boost::asio::any_io_executor, boost::asio::ip::basic_resolver_results<boost::asio::ip::tcp>, boost::asio::detail::default_connect_condition, boost::asio::detail::awaitable_handler<boost::asio::any_io_executor, boost::system::error_code, boost::asio::ip::basic_endpoint<boost::asio::ip::tcp>>>, IoExecutor = boost::asio::any_io_executor]']

Add details button.
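A minimal sketch of how the message could be split for such a dialog: the short part is shown up front and the remainder goes behind the details button. The split point (the first " [", where the Boost source location begins in the message above) is an assumption based on this one example, and `error_text` and `split_error` are hypothetical names.

```cpp
#include <string>

// Hypothetical pair of strings for the dialog: a short summary plus hidden details.
struct error_text {
    std::string summary;
    std::string details;
};

// Splits at the first " [" in the message. Assumption: other errors may not
// follow this shape, in which case the whole message becomes the summary.
error_text split_error(const std::string& message) {
    const auto pos = message.find(" [");
    if (pos == std::string::npos)
        return {message, ""};
    return {message.substr(0, pos), message.substr(pos + 1)};
}
```

With the message quoted above, the summary would end at "Connection refused" and the details would hold the bracketed source location.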

Ensure DQ dataset checks use code code

We are still checking for Name:

-- Get the flags dataset ID (for linking images)
select id into v_flags_dataset_id
from ores.dq_datasets_tbl
where name = 'Country Flag Images'
  and subject_area_name = 'Country Flags'
  and domain_name = 'Reference Data'
  and valid_to = ores.utility_infinity_timestamp_fn();

if v_flags_dataset_id is null then
    raise exception 'Dataset not found: Country Flag Images';
end if;

Create subsets of datasets code

In some cases we may just want to publish a subset of a dataset. For example, Majors, G11, etc. Or maybe these are just separate datasets?

In fact that is what they are. Break apart the larger sets - in particular currencies, countries, cryptos.

Fix code review comments for librarian code

We ran out of tokens to address code review comments from:

Notes:

- check to see if the diagram is using the new message to retrieve dependencies.

- most countries don't seem to have flags. How and when is the mapping done? Actually, after a restart I can now see the flags. It seems we need to force a load.

- headers in data set are the same colour as rows. Make them different.

- crypto currency codes sometimes overlap with country and ISO currency codes. We should "namespace" them somehow to avoid having a country flag overwritten by a crypto icon (happens with AE).

- when publish fails we can't see the errors.
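For the namespacing note above, one option is to key cached images by entity kind plus code instead of by code alone. This is a sketch under that assumption; `icon_kind`, `icon_key` and `icon_registry` are hypothetical names and do not describe how the image cache is currently implemented.

```cpp
#include <map>
#include <string>
#include <utility>

// Hypothetical entity kinds that can share short codes such as "AE".
enum class icon_kind { country, currency, crypto };

// The key combines kind and code, so equal codes no longer collide.
using icon_key = std::pair<icon_kind, std::string>;

class icon_registry {
public:
    void set(icon_kind kind, const std::string& code, std::string image_id) {
        images_[icon_key{kind, code}] = std::move(image_id);
    }

    // Returns the image id, or an empty string when nothing is registered.
    std::string get(icon_kind kind, const std::string& code) const {
        const auto it = images_.find(icon_key{kind, code});
        return it == images_.end() ? std::string{} : it->second;
    }

private:
    std::map<icon_key, std::string> images_;
};
```

With this shape, registering a crypto icon for "AE" leaves the country flag for "AE" untouched.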

Management of roles code

At present we have system-level roles. This is not ideal: you may want to delete roles, add new ones, etc. Do some analysis on the best way to implement these. We could have curated datasets for roles as well. Admin is the exception.

Notes:

- should be possible to see which accounts have what roles.

Publish history dialog is non-standard code

- always on top.

- no paging.

Add purge button to all entities code

We should be able to completely trash all data. We probably need a special permission for this but admin should be able to do it. Ideally all entity dialogs should have a purge action.

We should also have a "purge all" button which purges all data from all tables - ignores roles etc. This could be available on the data librarian.

Improve icon for methodology and dimensions code

At present we have icons which are not very sensible. For methodology we could use something that reminds one of a laboratory.

Clicking on connection should provide info code

- when never connected: nothing.

- when connected: server, bytes sent, received, status of connection.

- when disconnected: retries, disconnected since.

Add UI for bootstrapping from Qt code

At present we need to go to the shell to bootstrap. It would be easier if we just had a button in Qt for this. Actually we probably need a little wizard which takes you through choosing an initial population etc.

Some analysis on this via Gemini:

- we should call this "Data Provisioning". The wizard could be the data provisioning wizard.

- way to improve data setup:

Large systems often use a "Vanilla vs. Flavoured" approach:

- The Vanilla Step: This is the Baseline Configuration. It is non-negotiable and contains ISO codes (Currencies/Countries) and FpML schemas.

- The Flavour Step: The user chooses a Vertical or Scenario.

"Apply Financial Services Template?" (Adds FpML specific catalogs).

"Apply IDES/Demo Dataset?" (Adds the fictional entities and trades).

- "Demonstration and Evaluation": it should be possible to load up the system in a way that it is ready to exercise all of its functionality.

Add coloured icons code

At present we are using black and white icons. These are a bit hard to see. We should try the coloured ones and see if it improves readability.

Add a "is alive" message code

We need to brainstorm this. At present we can only tell if a server is there or not by connecting. It would be nice to give the user some visual indicator that the server is not up as soon as they type the host. This may not be a good idea.

Notes:

- could tell client if registration / sign-up is supported.

Change reason and categories need permissions code

We need some very specific permissions as these are regulatory-sensitive.

Change reason not requested on delete code

At present you can delete entities without providing a change reason.

Remember dialog sizes and positions code

At present we need to resize dialogs frequently. We should write this to QSettings.

Accounts need to have a human or robot field code

Look for correct terminology (actor type?).

Geo-location tests fail for some IP Addresses code

Error is probably happening because the range is not supposed to be used.